Cloud Package Storage Overview

This feature is available in paid and trial ProGet editions.

ProGet stores package files on disk by default, but you can configure a feed to store packages in cloud storage (Amazon S3, Microsoft Azure Blob, or Google Cloud Storage) instead. This offers two key benefits:

Scale on demand: cloud package stores take the guesswork out of planning for future storage needs. When packages are stored in the cloud, you no longer have to maintain, install, or provision additional storage capacity.

Simple disaster recovery: Amazon S3, Azure Blob, and Google Cloud Storage all store data redundantly, so you don't need a complex disaster recovery plan for large volumes of package files; your files are automatically saved to the cloud.

The main trade-off is speed: reading and writing files over an internet connection is generally much slower than using a local disk. However, the difference may not be noticeable in day-to-day use.

Configuring a Feed to Use Cloud Storage

By default, ProGet stores packages on disk using a disk-based package store.

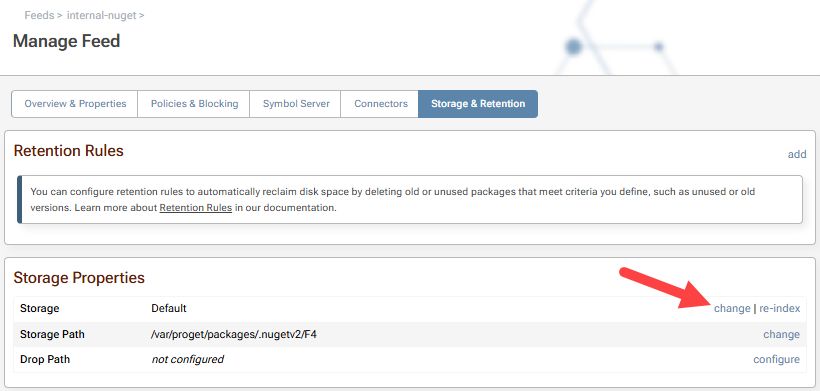

You can change a feed's package store by going to the Manage Feed page and clicking "change" next to the Package Store heading.

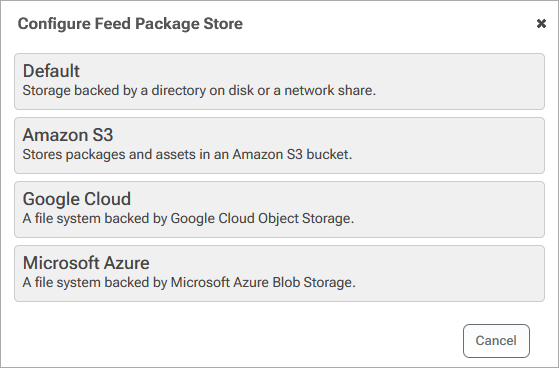

This opens a dialog where you can choose Amazon S3, Microsoft Azure, or Google Cloud.

If Amazon S3, Microsoft Azure, or Google Cloud is not listed as an option, verify that the corresponding extension is installed under "Administration" > "Extensions".

After selecting the package store type, you will be presented with a handful of required configuration options.

Amazon S3 Options

| Option | Description |
|---|---|
| Access Key & Secret Access Key | The equivalent of a username and password for Amazon Web Services; you can create them in the AWS IAM console |
| Region Endpoint | The region endpoint (such as us-east-1) where the bucket is located |
| Bucket | The name of the S3 bucket that will be used as the package store |
| Prefix | The path within the specified bucket; the default is "/" |
| Make Public | When set, uploaded files are given public read permission; this is generally not recommended |
| Encrypted | When set, ProGet requests that server-side encryption be used for packages; this is generally not recommended |
| IAM Role & Use Instance Profile | When Use Instance Profile is checked, IAM Role should contain the name of the role to use instead of the access key and secret key |
| Enable Path Style for S3 | When set, path-style URLs are used to access S3 buckets, for compatibility with certain applications and services |
| Custom service URL | When specified, overrides the region endpoint |

See our feed configuration tutorial for step-by-step instructions on using Amazon S3 as storage for a ProGet feed.

Usage with Ceph/RGW

Because Ceph/RGW implements the Amazon S3 API, ProGet can communicate with your Ceph instance as if it were an Amazon S3 bucket.

However, ProGet expects S3's "virtual-hosted style" addressing, in which the bucket name is part of the host name, so you may get an error like System.Net.Http.HttpRequestException: Name or service not known («bucket-name».«sub-domain».s3.«domain-name»:443).

Ceph, on the other hand, is often configured to serve buckets at https://«sub-domain».s3.«domain-name»/«bucket-name»/.

In this case, use your own DNS sub-domain name as the bucket name, and the connection will work.
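To see why the host name in that error cannot be resolved, it can help to compare the two S3 addressing styles. The sketch below is a hypothetical helper, `s3_object_url`, not ProGet or AWS SDK code; it assumes that a custom service URL replaces the regional AWS endpoint (as the options table describes) and uses made-up host and key names. In virtual-hosted style the bucket becomes the left-most DNS label, so «bucket-name».«host» must resolve; in path style only the endpoint host has to resolve.

```python
from typing import Optional
from urllib.parse import urlsplit


def s3_object_url(bucket: str, key: str, region: str = "us-east-1",
                  service_url: Optional[str] = None,
                  path_style: bool = False) -> str:
    """Return the URL an S3 client would request for an object.

    Hypothetical helper for illustration only; not ProGet or AWS SDK code.
    """
    # A custom service URL (for example a Ceph/RGW gateway) replaces the
    # regional AWS endpoint, mirroring the "Custom service URL" option.
    endpoint = service_url or f"https://s3.{region}.amazonaws.com"
    parts = urlsplit(endpoint)
    key = key.lstrip("/")
    if path_style:
        # Path style: the host is left alone and the bucket is the first
        # path segment, so only the endpoint host must resolve in DNS.
        return f"{parts.scheme}://{parts.netloc}/{bucket}/{key}"
    # Virtual-hosted style: the bucket is prepended to the host name, so
    # "<bucket>.<endpoint host>" must also resolve in DNS.
    return f"{parts.scheme}://{bucket}.{parts.netloc}/{key}"


if __name__ == "__main__":
    gateway = "https://ceph.s3.example.com"  # example host, not a real endpoint
    print(s3_object_url("packages", "feed/pkg.nupkg", service_url=gateway))
    # https://packages.ceph.s3.example.com/feed/pkg.nupkg
    print(s3_object_url("packages", "feed/pkg.nupkg", service_url=gateway,
                        path_style=True))
    # https://ceph.s3.example.com/packages/feed/pkg.nupkg
```

If the first form's host name does not exist in your DNS, the client fails before any S3 request is sent, which matches the "Name or service not known" error above.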

Azure Blob Options

When configuring Azure Blob storage, you can choose between two connection types: Connection String and Azure Credential Chain.

Connection String

| Option | Description |
|---|---|
| Connection string | A Microsoft Azure Storage connection string, such as DefaultEndpointsProtocol=https;AccountName=account-name;AccountKey=account-key |
| Container | The name of the Azure Blob container that will receive the uploaded files |
| Target Path | The path within the specified container |
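A connection string is a list of Name=value settings separated by semicolons. Before pasting one into ProGet, you could sanity-check it with a small script like the sketch below. `parse_connection_string` and `missing_settings` are hypothetical helpers, and the check assumes the account-key form shown in the table; connection strings that use other settings (for example a shared access signature) would need different required names.

```python
from typing import Dict, List

# Settings required by the account-key form of the connection string.
REQUIRED_SETTINGS = ("AccountName", "AccountKey")


def parse_connection_string(conn: str) -> Dict[str, str]:
    """Split 'Name=value;Name=value' pairs into a dict (hypothetical helper)."""
    settings: Dict[str, str] = {}
    for segment in conn.split(";"):
        if not segment.strip():
            continue  # tolerate empty segments such as a trailing ';'
        name, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"segment has no '=': {segment!r}")
        # partition() splits on the first '=' only; account keys are base64
        # and often end with '=' padding, which must stay in the value.
        settings[name.strip()] = value.strip()
    return settings


def missing_settings(conn: str) -> List[str]:
    """Return the required setting names that are absent or empty."""
    settings = parse_connection_string(conn)
    return [name for name in REQUIRED_SETTINGS if not settings.get(name)]


if __name__ == "__main__":
    # Fake key for illustration: base64 of "fake-key".
    conn = "DefaultEndpointsProtocol=https;AccountName=account-name;AccountKey=ZmFrZS1rZXk="
    print(parse_connection_string(conn)["AccountKey"])  # ZmFrZS1rZXk=
    print(missing_settings("DefaultEndpointsProtocol=https;AccountName=x"))  # ['AccountKey']
```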

Azure Credential Chain

| Option | Description |
|---|---|
| Container URL | The URL of the Azure Blob container that will receive the uploaded files |
| Target Path | The path within the specified container |
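With this connection type no account key is entered, so the Container URL is what identifies both the storage account and the container. The sketch below splits such a URL into those two parts as a quick format check. `split_container_url` is a hypothetical helper, and it assumes the public-cloud host form https://«account».blob.core.windows.net/«container»; custom domains and sovereign clouds use other host names and would be rejected by it.

```python
from typing import Tuple
from urllib.parse import urlsplit

BLOB_SUFFIX = ".blob.core.windows.net"  # public Azure cloud only (assumption)


def split_container_url(url: str) -> Tuple[str, str]:
    """Return (storage account, container) from a container URL (hypothetical helper)."""
    parts = urlsplit(url)
    if parts.scheme != "https" or not parts.hostname:
        raise ValueError("expected an https URL with a host name")
    host = parts.hostname  # urlsplit already lower-cases the host name
    if not host.endswith(BLOB_SUFFIX):
        raise ValueError(f"host does not end with {BLOB_SUFFIX}")
    account = host[: -len(BLOB_SUFFIX)]
    if not account or "." in account:
        raise ValueError("could not find a single storage account name in the host")
    segments = [s for s in parts.path.split("/") if s]
    if len(segments) != 1:
        # A container URL names exactly one container.
        raise ValueError("expected exactly one path segment (the container name)")
    return account, segments[0]


if __name__ == "__main__":
    print(split_container_url("https://myaccount.blob.core.windows.net/packages"))
    # ('myaccount', 'packages')
```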

See our feed configuration tutorial for step-by-step instructions on using Azure Blob storage for a ProGet feed.

Google Cloud Options

| Option | Description |
|---|---|
| Bucket | The name of the Google Cloud Storage bucket that will be used as the package store |
| Prefix | The path within the specified bucket; leave it blank to use the bucket root |
| Service account key | The private key JSON object generated for the service account used to access the bucket |
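The Service account key field expects the whole JSON key file that Google Cloud generates for a service account. A common mistake is pasting only part of it, or a different kind of credential file. The sketch below, `check_service_account_key`, is a hypothetical pre-flight check, not something ProGet runs; it only looks for a few fields that service account key files contain (type, project_id, private_key, client_email) and does not verify that the key is valid or has access to the bucket.

```python
import json
from typing import Any, Dict

# A few of the fields present in a Google Cloud service account key file.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_key(text: str) -> str:
    """Sanity-check a key file's contents and return its client e-mail (hypothetical helper)."""
    key: Dict[str, Any] = json.loads(text)  # raises ValueError on invalid JSON
    missing = [name for name in REQUIRED_FIELDS if not key.get(name)]
    if missing:
        raise ValueError(f"key is missing fields: {', '.join(missing)}")
    if key["type"] != "service_account":
        raise ValueError(f"expected type 'service_account', got {key['type']!r}")
    if "BEGIN PRIVATE KEY" not in key["private_key"]:
        raise ValueError("private_key does not look like a PEM private key")
    return key["client_email"]
```

Running it on the contents of a downloaded key file and printing the returned e-mail address is a quick way to confirm which service account ProGet will authenticate as; that account still needs permission on the bucket.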

See our feed configuration tutorial for step-by-step instructions on using Google Cloud Storage for a ProGet feed.

Testing Storage Configuration

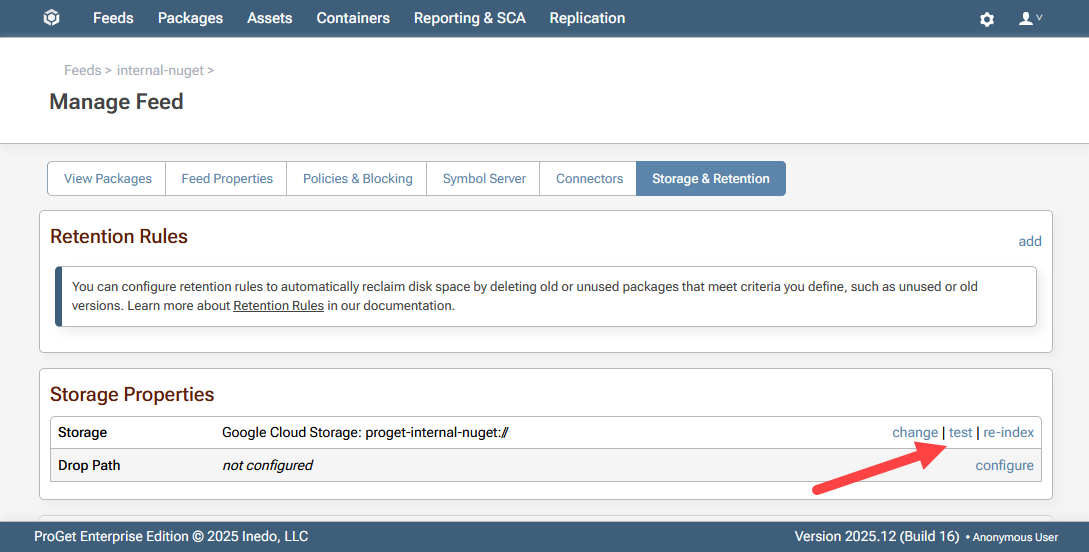

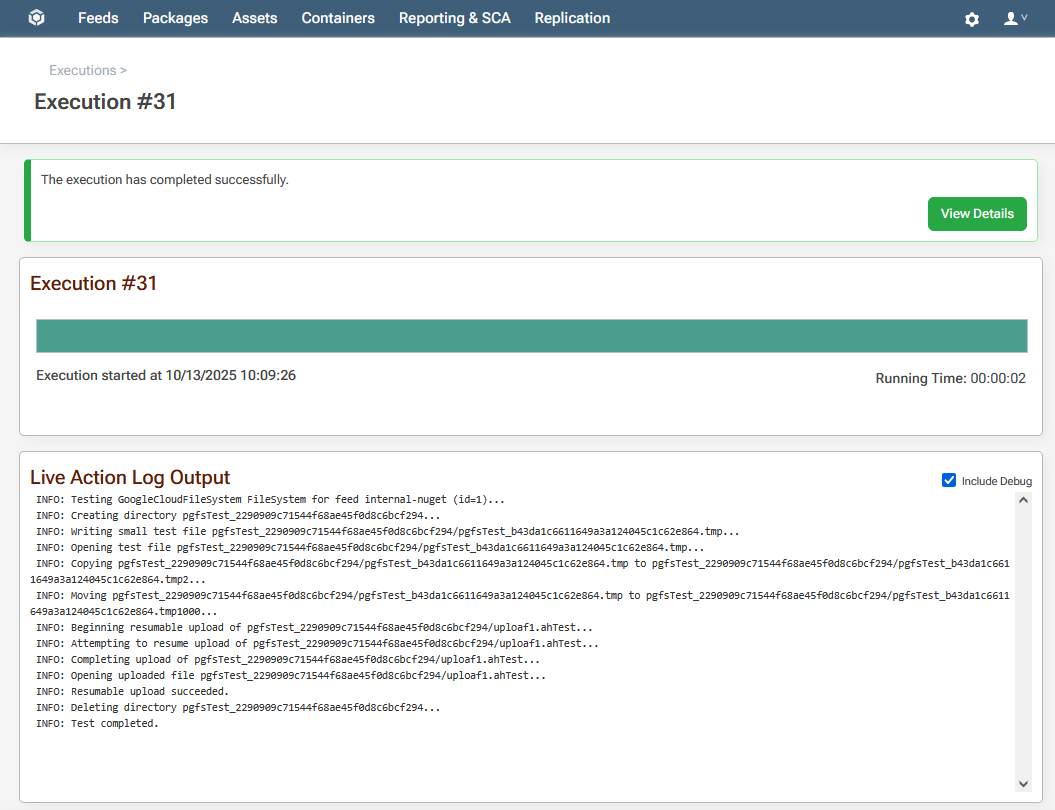

After you configure cloud storage, you will be prompted to test it. You can also run the test at any time by clicking "test" next to "storage" under "Storage Properties".

After you click "test", ProGet starts an execution that runs a series of tests and displays its logs. When the execution is complete, use your browser's back button to return.

Migrating an Existing Feed

When you change a feed's package store, existing package files are not moved. To make sure the new package store contains the same packages, follow these steps:

1. Clear the cached packages
2. Stop the ProGet service
3. Note the disk path of the feed («old-disk-path»)
4. Change the package store and configure it appropriately
5. Set the Drop Path to the «old-disk-path» from step 3
6. Start the service
7. Once the packages are imported, delete the empty «old-disk-path» from step 3

Note that this procedure works only for feed types that support drop paths.
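If you script the final cleanup step, a cautious approach is to delete «old-disk-path» only when no files remain under it, since a leftover file means a package was not imported. The sketch below is a hypothetical helper, `remove_if_empty`, not a ProGet tool; `old_disk_path` stands in for the path you noted in step 3, and the demo uses a throwaway temporary folder rather than a real feed path.

```python
import tempfile
from pathlib import Path
from typing import List


def remove_if_empty(old_disk_path: str) -> List[Path]:
    """Delete old_disk_path only if no files remain under it (hypothetical helper).

    Returns the leftover files; an empty list means the folder was removed.
    """
    root = Path(old_disk_path)
    leftovers = [p for p in root.rglob("*") if p.is_file()]
    if leftovers:
        return leftovers  # something was not imported; leave everything in place
    # Remove sub-folders deepest-first. rmdir() refuses to delete a non-empty
    # folder, so a file that appears mid-way stops the cleanup with an OSError.
    subdirs = sorted((p for p in root.rglob("*") if p.is_dir()),
                     key=lambda p: len(p.parts), reverse=True)
    for folder in subdirs:
        folder.rmdir()
    root.rmdir()
    return []


if __name__ == "__main__":
    # Demo on a throwaway folder, not a real feed path.
    demo = Path(tempfile.mkdtemp())
    (demo / "sub" / "deeper").mkdir(parents=True)
    print(remove_if_empty(str(demo)), demo.exists())  # [] False
```

Returning the leftover files instead of raising lets you list exactly which packages still need attention before trying again.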

Setting up ProGet as a Cloud Storage UI

In addition to configuring a feed to store packages in cloud storage, you can use ProGet as a user interface for managing assets stored in the cloud. This allows users to browse, upload, and manage assets without needing access to the cloud provider's console, while administrators retain centralized control over permissions and retention. To learn how to set this up, see HowTo: Set Up ProGet as a UI for Cloud Storage.